From Scripts to Agents: Understanding the Evolution of AI

Everyone's talking about agentic AI, but what actually makes an agent different from ChatGPT? Here's the evolution from scripts to agents, and why this distinction matters now.

We're at a fascinating moment, standing at the edge of a transformation as significant as the internet itself. The technology that will define the next decade of work is emerging right now, but most people haven't quite connected the dots yet, and the conversation hasn't caught up to the reality.

So let's clear this up: What exactly is an agent?

My First Real Agent Experience

The first time I watched an agent take control of my browser and act as me, something clicked. This wasn't ChatGPT giving me an answer I could copy and paste. This was software deciding what to click, where to navigate, and how to interact with websites on my behalf.

It felt different. Not just smarter, but more autonomous.

I have my own Turing test for agents: can it order a pizza for me? Not "tell me how to order a pizza" or "write the API call for a pizza ordering system." Actually order the pizza. Navigate to a restaurant website, handle the UI, choose options, complete payment, confirm delivery.

I've tried this several times, and no agent has managed it yet. But the fact that we're even attempting it tells you something about where we are. Once that works reliably, we're in a fundamentally different world.

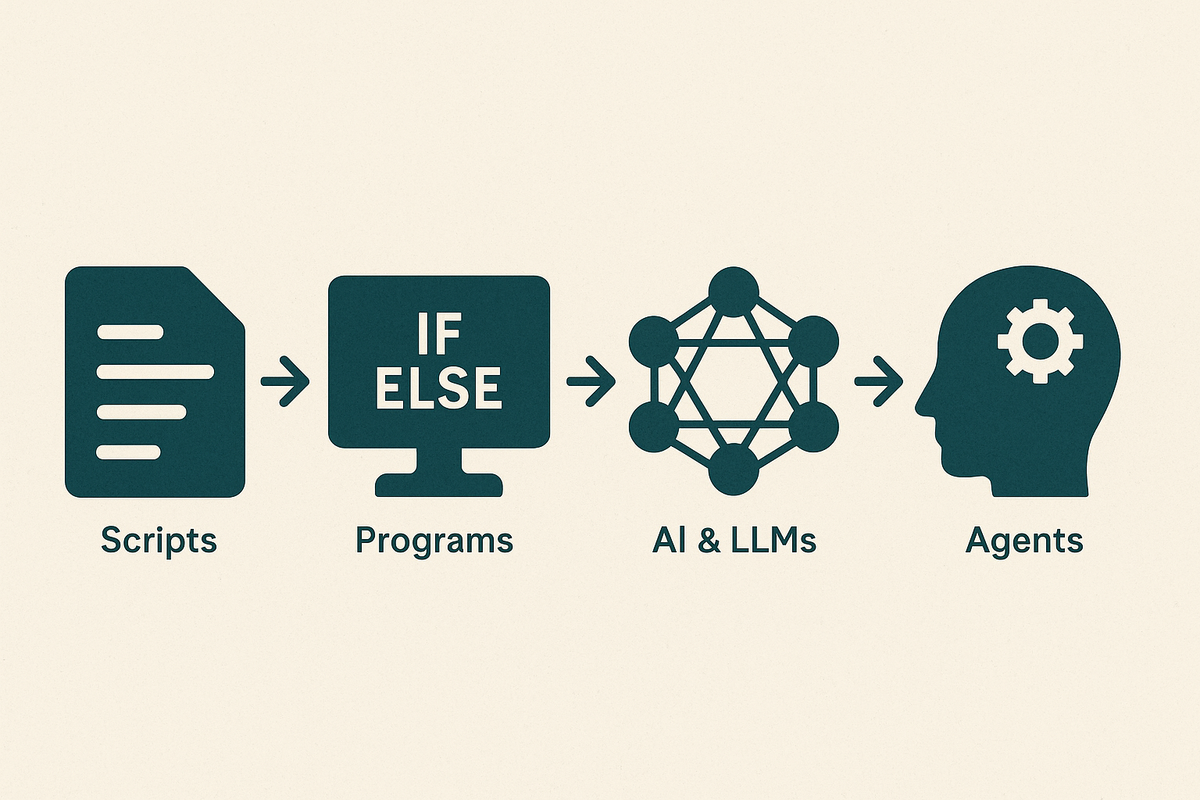

The Evolution: Where Agents Fit

To understand agents, you need to see the progression. This isn't a rigorous computer science taxonomy, but a framework for seeing the pattern of increasing capability and autonomy.

Procedures & Scripts: Follow These Steps

In the beginning, we had procedures: step-by-step instructions. Input goes in, the computer follows your exact instructions, output comes out. PHP started as a scripting language. Bash scripts automate tasks. These are recipes.
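In code, a script in this sense is simply a fixed sequence of steps. Here is a minimal Python sketch; the task (tidying a list of names) is a hypothetical example chosen only to show the shape of a recipe:

```python
def tidy_names(raw_names):
    # Hypothetical example task: the same three steps run for every input
    # Step 1: strip surrounding whitespace from each entry
    stripped = [name.strip() for name in raw_names]
    # Step 2: normalize capitalization
    titled = [name.title() for name in stripped]
    # Step 3: sort alphabetically
    return sorted(titled)


print(tidy_names(["  bob", "alice ", "CAROL"]))
```

Whatever the input, the same steps run in the same order; nothing about the data changes what the script does next.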

Programs: Make Decisions

Programs added conditional logic: the ability to look at a situation and branch. If this condition holds, do that; if not, do something else. The line between scripts and programs can be blurry (even scripts can have if statements), but programs represented a leap in architectural sophistication and capability.
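A minimal sketch of that branching, using a hypothetical delivery-fee rule (the function name, thresholds, and fees are invented for illustration):

```python
def delivery_fee(order_total, distance_km):
    # Hypothetical pricing rule, written entirely in advance by the programmer
    # Branch 1: large orders ship free
    if order_total >= 30:
        return 0.0
    # Branch 2: nearby addresses pay a flat fee
    elif distance_km <= 5:
        return 2.5
    # Branch 3: everything else pays the flat fee plus a per-kilometre charge
    else:
        return 2.5 + 0.5 * (distance_km - 5)


print(delivery_fee(20, 9))
```

The program now behaves differently depending on its input, but every path it can take was spelled out by a person beforehand.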

Algorithms: Optimize Solutions

Algorithms are about finding the best way to solve specific problems. Sorting data efficiently. Finding the shortest path. Calculating optimal outcomes. This is problem-solving with mathematical elegance.
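Finding the shortest path is a classic example. The sketch below uses Dijkstra's algorithm, one standard approach, which assumes all edge weights are non-negative; the small road network is made up for illustration:

```python
import heapq


def shortest_distance(graph, start, goal):
    # Priority queue of (distance so far, node); the smallest distance pops first
    queue = [(0, start)]
    best = {start: 0}
    while queue:
        dist, node = heapq.heappop(queue)
        if node == goal:
            return dist
        # Skip stale queue entries for nodes already reached more cheaply
        if dist > best.get(node, float("inf")):
            continue
        for neighbor, weight in graph.get(node, {}).items():
            new_dist = dist + weight
            if new_dist < best.get(neighbor, float("inf")):
                best[neighbor] = new_dist
                heapq.heappush(queue, (new_dist, neighbor))
    return None  # goal is unreachable from start


# Hypothetical road network: node -> {neighbor: distance}
roads = {
    "home": {"cafe": 4, "park": 1},
    "park": {"cafe": 2, "pizzeria": 6},
    "cafe": {"pizzeria": 3},
}
print(shortest_distance(roads, "home", "pizzeria"))  # home -> park -> cafe -> pizzeria
```

Rather than trying every route, the algorithm always expands the closest unexplored node next, which is what makes it efficient.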

AI & LLMs: Understand and Generate

Then came artificial intelligence and, more recently, Large Language Models (LLMs) such as the GPT models behind ChatGPT. These systems understand natural language, recognize patterns, and generate human-like responses. They can write, analyze, explain, create. This felt like magic when it arrived.

Agents: Act Autonomously to Achieve Goals

And now we arrive at agents.

Here's the crucial distinction, and this is what I keep trying to explain to clients and colleagues:

An LLM responds. An agent acts.

When you use ChatGPT, you ask a question and it gives you an answer. One interaction. You're still doing the work.

When you deploy an agent, you give it a goal and it figures out how to achieve it. It makes plans. It uses tools. It takes actions. It checks whether those actions worked. It adjusts its approach. It keeps going until the goal is accomplished.

Someone recently told me that agents have "the ability to perceive." That stuck with me. In traditional programming, variables are passed in as input. An agent goes out and finds its own input. It observes. It has initiative.

That's closer to original thought than anything we've built before.
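The cycle of planning, using tools, acting, checking, and adjusting can be sketched as a loop. This is a minimal illustration under heavy assumptions: the simulated pizza shop, the `add_to_cart` and `checkout` tools, and the rule-based `choose_action` function (standing in for the LLM that would do the planning in a real agent) are all hypothetical, not any particular framework's API.

```python
from dataclasses import dataclass, field


@dataclass
class World:
    # Hypothetical environment the agent observes and acts on
    cart: list = field(default_factory=list)
    paid: bool = False


# Hypothetical tools; a real agent might drive a browser or call an API
def add_to_cart(world, item):
    world.cart.append(item)
    return "ok"


def checkout(world, method):
    # Simulated shop that declines card payments but accepts cash on delivery
    if method == "card":
        return "failed"
    world.paid = True
    return "ok"


TOOLS = {"add_to_cart": add_to_cart, "checkout": checkout}
PAYMENT_METHODS = ["card", "cash_on_delivery"]


def goal_reached(world, item):
    return item in world.cart and world.paid


def choose_action(world, item, failed_methods):
    # Stand-in for an LLM planner: pick the next tool based on the observed state
    if item not in world.cart:
        return "add_to_cart", (item,)
    for method in PAYMENT_METHODS:
        if method not in failed_methods:
            return "checkout", (method,)
    return None  # no untried options left, so give up


def run_agent(item, max_steps=10):
    world = World()
    failed_methods = set()
    log = []
    for _ in range(max_steps):
        # Perceive: compare the current state with the goal
        if goal_reached(world, item):
            break
        # Plan: decide which tool to call next
        action = choose_action(world, item, failed_methods)
        if action is None:
            break
        name, args = action
        # Act: call the tool and record what happened
        result = TOOLS[name](world, *args)
        log.append((name, args, result))
        # Adjust: remember a failed payment method so it is not tried again
        if name == "checkout" and result == "failed":
            failed_methods.add(args[0])
    return goal_reached(world, item), log


done, log = run_agent("margherita")
print(done)
for entry in log:
    print(entry)
```

The point is the structure: the agent receives a goal rather than a list of steps, checks the state before every move, and changes course when the card payment fails. In a real system, `choose_action` would typically be a call to a language model, and the tools would operate on real websites or services.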

Automation vs. Intelligence: Know the Difference

Here's where a lot of confusion happens. People hear "agent" and think "automation." But these are fundamentally different:

Automation is a fixed workflow with predetermined steps. If this happens, do that. It's sophisticated and useful, but it's not adaptive. You're essentially building a very specific procedure that handles known scenarios.
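A fixed workflow can be sketched like this. The step names and the simulated sites are hypothetical; what matters is that the list of steps is decided before the run starts:

```python
def order_pizza_workflow(site):
    # Every run executes the same predetermined steps, in the same order
    steps = ["add_to_cart", "pay_by_card", "confirm_order"]
    completed = []
    for step in steps:
        outcome = site[step]()
        if outcome != "ok":
            # A scenario nobody scripted: the workflow cannot adapt, so it stops
            raise RuntimeError(f"workflow halted at {step}: {outcome}")
        completed.append(step)
    return completed


# Hypothetical sites, modelled as dicts mapping step name -> callable
happy_site = {
    "add_to_cart": lambda: "ok",
    "pay_by_card": lambda: "ok",
    "confirm_order": lambda: "ok",
}
card_declined_site = dict(happy_site, pay_by_card=lambda: "card declined")

print(order_pizza_workflow(happy_site))
try:
    order_pizza_workflow(card_declined_site)
except RuntimeError as err:
    print(err)
```

When the card is declined, the workflow has no way to try something else; handling that case means a person adding another predetermined step or branch.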

An agent is given a goal and figures out how to achieve it. It doesn't follow a script. It decides what tools to use, what information to gather, what steps to take. It adapts when things don't work as expected.

The question to ask: Do you want an automated process, or do you want an intelligent process?

If your need is repetitive and well-defined, automation might be perfect. If your need involves variability, judgment, or complex multi-step problem solving, you want an agent.

Why This Matters Now

I write about this partly because teaching helps me learn. I'm building with agents. I'm consulting on them. But mostly, I want to spread knowledge and help others understand what's actually happening here.

Because AI in general, and agentic AI specifically, represents possibly the most profound change in human civilization. I'm not being dramatic. Many people who work in this space feel the same way. It's an amazing thing to be a part of, and I want more people to understand it clearly.

Right now, there's general fuzziness about what an agent actually is. The technology exists. The infrastructure is being built. But widespread understanding hasn't caught up.

Agents can:

- Navigate multiple systems and tools

- Break down complex goals into tasks

- Execute those tasks across different platforms

- Learn from results and adjust their approach

- Work asynchronously while you focus elsewhere

This is happening now. Not in five years. Now.

The Bottom Line

If you remember one thing from this article, remember this:

Agents don't just respond to what you ask. They pursue what you want achieved.

That's the difference. That's the leap.

The technology has evolved from "follow these steps" to "make this decision" to "understand this context" to "achieve this goal."

When computers move from executors to achievers, everything changes.

Welcome to the age of agents.
